Trump to Issue Unified AI Regulation Executive Order This Week

Washington, D.C. – In a move poised to reshape the regulatory landscape for artificial intelligence, President Donald J. Trump announced plans to sign a single, nationwide executive order establishing a unified rulebook for AI development across the United States. The aim is to streamline federal oversight, reduce regulatory fragmentation, and accelerate innovation by replacing a patchwork of state and local approvals with a centralized framework.

A central thesis: unified governance to sustain U.S. leadership

Trump’s framing centers on national consistency as essential to preserving the United States’ competitive edge in the global AI race. Speaking to supporters and policy advisers, the president underscored the logistical and financial costs of a fifty-state regulatory mosaic. He argued that a single rulebook would eliminate duplicative processes and ensure faster deployment of AI technologies across sectors, from healthcare and manufacturing to finance and transportation.

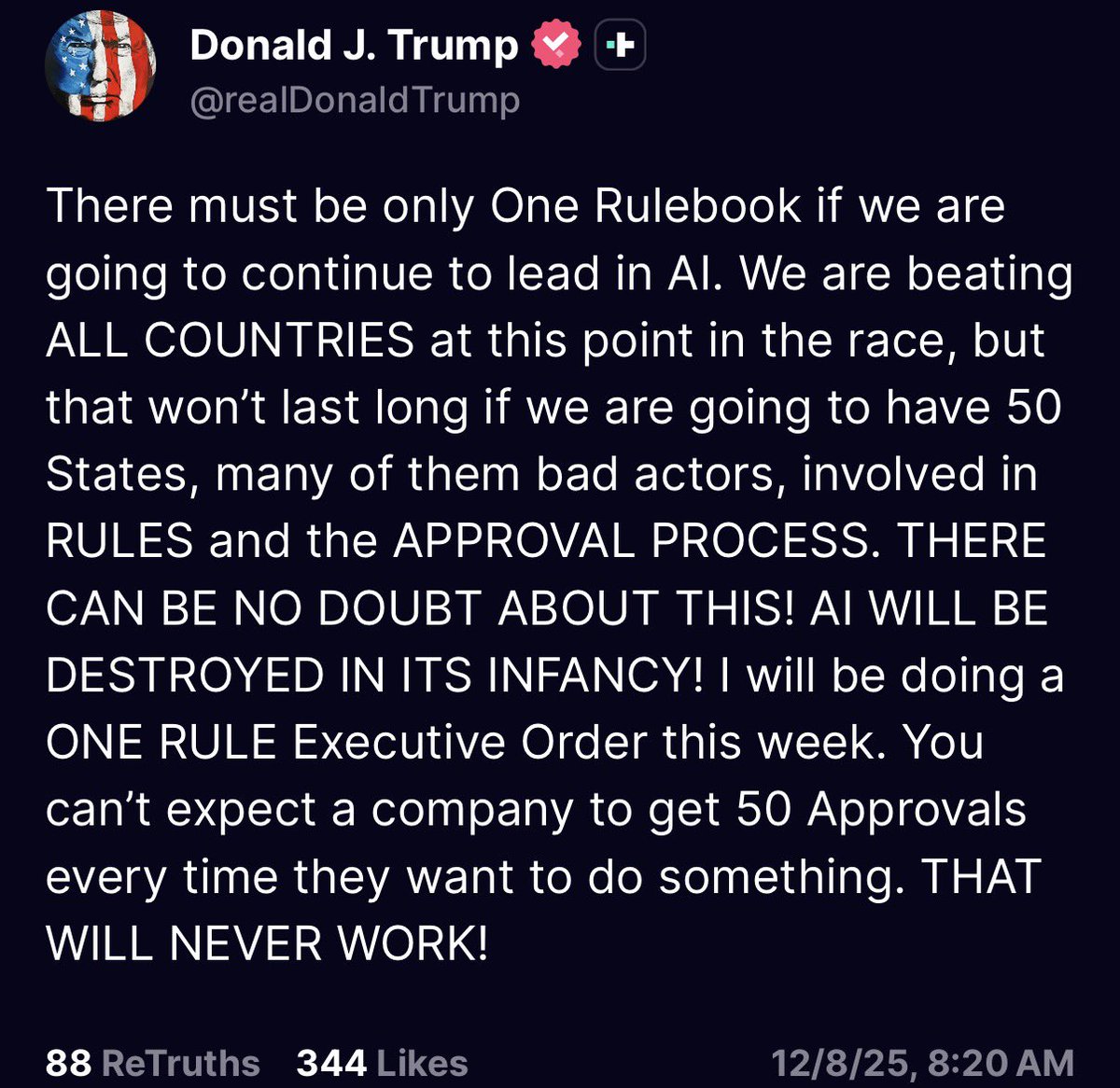

“There must be only one Rulebook if we are going to continue to lead in AI,” Trump said during a televised briefing. “We are beating ALL COUNTRIES at this point in the race, but that won't last long if we are going to have 50 States, many of them bad actors, involved in RULES and the APPROVAL PROCESS.”

The forthcoming executive order, according to aides briefed on the plan, is designed to preempt bureaucratic delays while maintaining essential safeguards. Proponents say a centralized framework would standardize safety, privacy, and accountability measures, reducing uncertainty for companies navigating the regulatory landscape. Critics, however, have cautioned that a top-down approach could overlook regional needs and stifle local innovation ecosystems.

Historical context: regulatory evolution in U.S. AI policy

The proposal arrives after years of evolving AI policy at multiple levels of government. Federal policy has often oscillated between promoting innovation and enforcing guardrails, with agencies issuing guidance on algorithmic transparency, safety standards, and consumer protection. States have independently piloted their own AI-related initiatives, creating a patchwork of licensing regimes, ethical guidelines, and liability frameworks.

Historically, centralized policy efforts have aimed to balance national competitiveness with public trust. The shift toward a unified rulebook echoes past efforts to harmonize standards in other high-tech sectors, such as cybersecurity, data privacy, and autonomous systems. Proponents argue that coherence at the federal level is essential when AI technologies cross state lines and borderless supply chains complicate enforcement.

Economic impact: potential supply chain efficiencies and investment signals

A key argument for the proposed executive order is that a single, nationwide standard would reduce compliance costs for technology firms, particularly startups and smaller enterprises that would otherwise face the burden of navigating 50 separate approval processes. By simplifying regulatory pathways, companies could allocate more capital to research, talent acquisition, and product development rather than to duplicative administrative work.

Experts anticipate that streamlined oversight could expedite AI deployment in sectors with high value creation, such as healthcare diagnostics, industrial automation, and climate analytics. Improved predictability in regulatory expectations might attract private investment, both domestic and foreign, by reducing policy risk and creating a clearer long-term roadmap for AI product lifecycles.

Yet, the economic calculus is not one-sided. Critics warn that a rapid consolidation of authority could inadvertently raise compliance risk in some jurisdictions or concentrate influence in federal agencies, potentially marginalizing regional concerns, workforce disparities, and local innovation ecosystems that have thrived under more nuanced, state-level experimentation. The net effect on job creation, productivity, and long-term economic resilience will likely hinge on the final balance between speed, safeguards, and flexibility embedded in the rulebook.

Regional comparisons: how the plan stacks up against international peers

Internationally, many economies pursue a hybrid model of national standards complemented by regional guidelines and industry-specific rules. The proposed U.S. approach would mark a more centralized posture relative to several peers that maintain robust federal standards while allowing state or provincial adaptations within defined parameters. In Europe, for example, the interplay between the GDPR's broad data-protection rules and the risk-based EU AI Act demonstrates a preference for comprehensive privacy protections alongside innovation-friendly provisions. China emphasizes state-led AI development with centralized oversight but also faces global pressure to strengthen transparency and governance.

If enacted, the U.S. unified rulebook would set a widely watched precedent for how to reconcile rapid AI advancement with public accountability in a large, decentralized federation. Observers expect policymakers to study the European and Asian models to calibrate safeguards such as algorithmic transparency, risk-based regulation, and accountability obligations for developers and deployers.

Key provisions expected to accompany the executive order

Details circulating ahead of the signing suggest several pillars likely to define the central rulebook:

- Standardized safety and risk assessment protocols: A uniform framework for evaluating potential harms, risk categories, and mitigation strategies across AI systems, with emphasis on high-stakes applications such as healthcare, finance, and critical infrastructure.

- Transparent disclosure requirements: Clear expectations for model provenance, data sources, and limitations, enabling users and regulators to understand how AI outputs are generated.

- Accountability mechanisms: Clear lines of responsibility for developers, deployers, and operators, including auditability requirements and remedies for harms or biases identified in AI systems.

- Privacy and data governance: Consistent privacy safeguards and data-use constraints designed to protect individuals while supporting responsible AI innovation.

- Compliance and enforcement: A centralized regulatory body or interagency framework with defined inspection powers, penalties, and oversight processes to ensure uniform adherence across states.

- International collaboration and export controls: Provisions addressing cross-border data flows, technology transfer, and alignment with global AI governance trends to maintain competitive advantage while managing national security considerations.

Public reaction and industry sentiment

Public reaction to the announcement has been mixed, reflecting broader debates about how best to balance innovation with safeguards. Advocates for rapid AI deployment welcomed the prospect of clear, predictable rules that reduce bureaucratic friction. They argued that uncertainty and fragmented regulation have slowed product roadmaps and dampened investor confidence.

Consumer advocacy groups, by contrast, urged careful calibration of the policy to prevent overreach and to ensure robust protections for personal data, algorithmic fairness, and transparency. In industrial sectors such as manufacturing and logistics, stakeholders anticipate improved coordination across supply chains and faster adoption of automation technologies that can boost productivity and competitiveness.

Labor representatives have voiced concerns about the potential impact on skilled jobs and the need for ongoing training programs to prepare workers for an AI-enabled economy. Policymakers and industry analysts expect the unified framework to include provisions for workforce development, reskilling initiatives, and an emphasis on ethical considerations in AI deployment.

Geopolitical implications: signaling strength in a competitive landscape

On the world stage, a single United States AI rulebook would send a strong signal about national ambition and regulatory resolve. Supporters argue that a centralized approach can prevent policy gaps that allow uncontrolled or inconsistent development, which could pose risks to national security and public trust. Opponents, however, worry about the risk of policy inertia or misalignment with rapidly evolving technology trends if the federal framework lags behind market innovations or regional needs.

Analysts note that alignment with allied nations on AI standards could enhance interoperability in cross-border projects, reduce friction for multinational enterprises, and support coordinated responses to geopolitical challenges arising from AI-enabled capabilities.

Implementation timeline and next steps

The administration intends to issue the executive order later this week, with a transition period during which federal agencies, regulators, and industry stakeholders would collaborate to operationalize the rulebook. The process is expected to involve public consultations, interagency coordination, and input from state officials, technology companies, academic researchers, and civil society groups.

Key milestones likely include:

- Finalization of the rulebook’s risk classifications and safety protocols.

- Establishment of a centralized oversight mechanism or interagency governance structure.

- Publication of guidance on data privacy, transparency, and accountability measures.

- Phase-in periods for different AI domains, prioritizing high-impact sectors.

- Ongoing performance metrics and mechanisms for regulatory updates as technology evolves.

Public safety and ethical considerations

Beyond economic implications, the unified rulebook aims to address public safety and ethical concerns associated with AI deployment. Ensuring that AI systems operate reliably in critical contexts, such as medical diagnostics, emergency response, and essential infrastructure, remains a priority. Safeguards against bias, discrimination, and misinformation are expected to be integral to the framework, with ongoing oversight to monitor emerging risks.

Industry observers also highlight the importance of adaptable standards that can respond to breakthroughs in AI research, such as multimodal models, reinforcement learning in real-world settings, and increasingly autonomous decision-making. A balance between rigorous safety measures and flexible innovation regimes will be crucial to sustaining momentum without compromising public trust.

Historical lens: lessons from past technology regulation

Looking back at previous tech policy moments, centralized regulatory efforts have often yielded both rapid harmonization and oversight challenges. When authorities provide clear, predictable rules, companies tend to invest more confidently, accelerating product development and market entry. However, over-centralization risks stifling regional experimentation and disregarding localized labor dynamics or industry-specific needs.

The proposed approach seeks to draw lessons from those experiences by offering a unified baseline while allowing targeted adaptability in consultation with regional stakeholders. In this sense, the rulebook could function as a legal and ethical backbone that supports responsible innovation across diverse geographic and economic contexts.

Conclusion: a pivotal moment for American AI governance

As anticipation builds around the executive order’s release, the AI governance landscape stands at a crossroads. The move toward a unified federal AI rulebook represents a deliberate attempt to harmonize standards, reduce regulatory friction, and maintain the United States’ leadership in a rapidly evolving technological frontier. The outcome will hinge on how well the administration balances speed, safety, and regional flexibility, as well as how effectively it engages industry, academia, labor, and the public in shaping a governance framework that is both robust and adaptable.

With the clock ticking on critical AI developments and international competitors advancing at a brisk pace, the forthcoming policy milestone could redefine the trajectory of AI innovation, investment, and governance for years to come. Stakeholders across government, business, and civil society will be watching closely as the unified rulebook begins to take shape, signaling not just regulatory reform but a broader moment of strategic recalibration for the United States in the age of artificial intelligence.