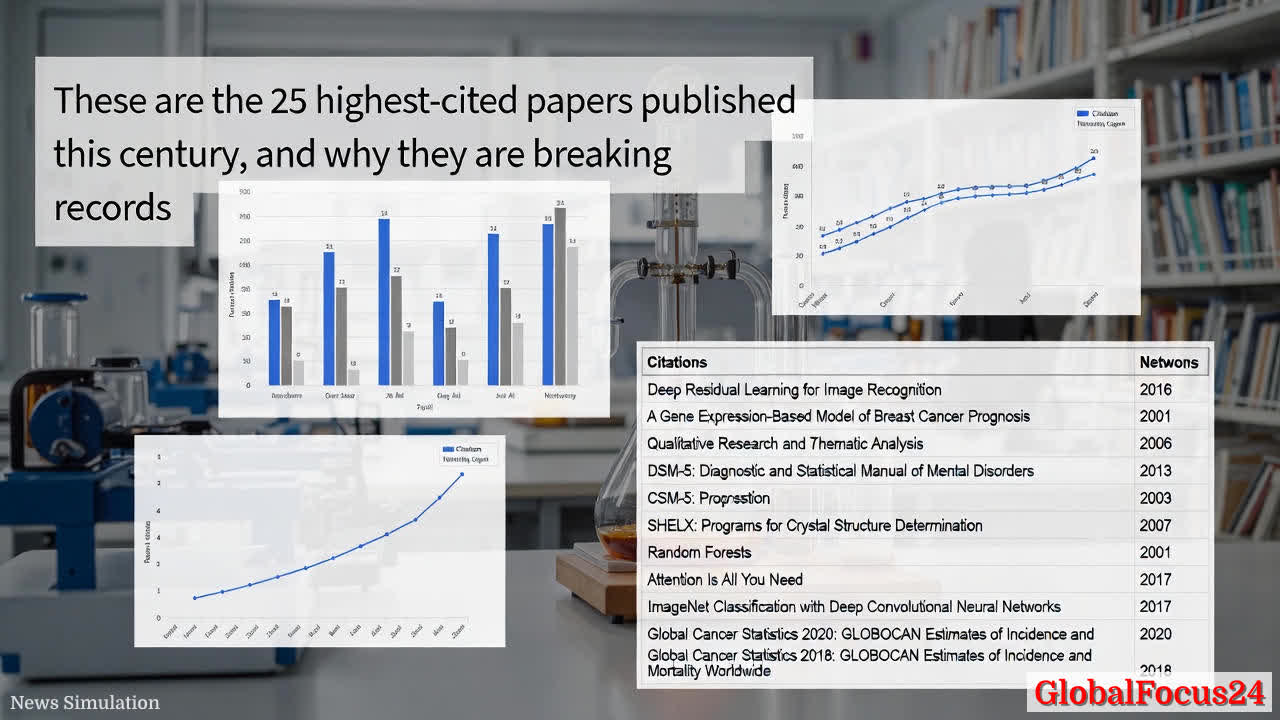

AI Methods Lead the Pack in 21st Century's Most-Cited Papers

Over the past two decades, scientific influence has tilted heavily toward artificial intelligence, data science, and health statistics. A comprehensive citation analysis now highlights the 25 research papers published since 2000 that have the highest number of citations across major academic databases. Read as a barometer of research momentum, the list reveals how foundational methods, transformative architectures, and global health statistics have shaped modern science and technology. The story is not just about numbers; it’s about how these works accelerated research across domains, from image recognition and genomics to psychology, cancer epidemiology, and software for data analysis. This article offers historical context, examines economic impact, and draws regional comparisons that illuminate the global diffusion of knowledge in the 21st century.

Historical Context: From Early AI Milestones to Deep Learning Dominance

The upper portion of the list is anchored by breakthroughs that redefined what machines can learn. At the top is a 2016 paper on deep residual learning for image recognition, which introduced ResNets. This architecture addressed a long-standing problem: very deep neural networks were hard to train, and their accuracy often degraded as more layers were added. By adding skip connections that let each block learn a residual correction to its input, ResNets unlocked deeper models and more accurate image understanding. The ripple effects were immediate: improved computer vision in medical imaging, autonomous systems, and consumer technology, fueling both academic research and industrial innovation. The rapid adoption of residual networks catalyzed a broader shift toward deep learning as the default approach for perception tasks, setting the stage for subsequent advances in natural language processing, reinforcement learning, and multimodal AI.
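The residual idea can be sketched in a few lines. The toy block below is only an illustration under simplifying assumptions: it uses small dense layers in plain Python rather than the convolutional layers and normalization used in practice, and the names `linear`, `relu`, and `residual_block` are hypothetical helpers, not any library's API. It shows the key step, which is adding the block's input back onto its output.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]


def linear(weights: Matrix, v: Vector) -> Vector:
    """Matrix-vector product (a dense layer without bias)."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Compute relu(F(x) + x), where F is two dense layers with a ReLU between."""
    fx = linear(w2, relu(linear(w1, x)))
    # Skip connection: the input is added back, so the layers only
    # need to learn a correction (the residual) on top of x.
    return relu([f + xi for f, xi in zip(fx, x)])


# With all-zero weights F(x) is zero, so the block passes a
# non-negative input through unchanged.
x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
out = residual_block(x, zeros, zeros)
print(out)
```

Because a block can fall back to passing its input through, stacking more blocks should not make the network worse than a shallower one, which is the intuition usually given for why this design made very deep models practical.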

Trailing closely behind is a 2001 method for analyzing relative gene expression data using real-time quantitative PCR. This technique became a mainstay in molecular biology and translational research, enabling precise quantification of changes in gene expression under various conditions. The methodological clarity and robustness of this approach made it a go-to standard in labs worldwide, underpinning countless studies in genetics, oncology, infectious disease, and personalized medicine. The enduring relevance of quantitative PCR analyses demonstrates how foundational analytical methods can anchor progress across multiple disciplines.
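Relative quantification of this kind is commonly computed with the 2^(-ΔΔCt) calculation. The sketch below is a minimal illustration, assuming roughly 100% amplification efficiency (one doubling per cycle) and a single reference gene; the function name `fold_change` and the Ct values are hypothetical and not taken from the paper.

```python
def fold_change(
    ct_target_treated: float,
    ct_ref_treated: float,
    ct_target_control: float,
    ct_ref_control: float,
) -> float:
    """Relative expression of a target gene, treated vs. control sample.

    Each Ct is the PCR cycle at which the signal crosses the threshold;
    a lower Ct means more starting template.
    """
    # Normalize the target gene to the reference gene within each sample.
    delta_treated = ct_target_treated - ct_ref_treated
    delta_control = ct_target_control - ct_ref_control
    # Compare the normalized values between conditions.
    delta_delta = delta_treated - delta_control
    # Assumes the amount of product doubles every cycle.
    return 2.0 ** (-delta_delta)


# Hypothetical Ct values: the target crosses the threshold two cycles
# earlier in the treated sample while the reference gene is unchanged.
fc = fold_change(22.0, 18.0, 24.0, 18.0)
print(fc)
```

A two-cycle shift corresponds to a fourfold difference in expression under the doubling assumption; when amplification efficiency departs noticeably from 100%, an efficiency-corrected calculation would be needed instead.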

A 2006 guide on using thematic analysis in psychology marks a pivotal moment for qualitative research. The structured approach outlined in this widely cited work provided researchers with a clear framework for interpreting qualitative data, enabling systematic coding, theme development, and interpretation of complex human experiences. Its influence extends beyond psychology to education, health sciences, sociology, and market research, underscoring the cross-disciplinary value of rigorous qualitative methods in an increasingly data-rich research environment.

Health references also feature prominently, with the fifth edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-5) appearing among the most-cited works. The DSM-5’s role as a diagnostic standard for mental health conditions means its classifications, criteria revisions, and clinical implications reverberate through medical practice, health policy, and epidemiological research. While not a treatment guideline per se, its diagnostic framework shapes research design, clinical trials, and health statistics globally, contributing to consistency in reporting and comparability across studies and regions.

In the realm of structural biology and chemistry, the SHELX software suite for crystal structure analysis is another cornerstone. The suite’s longevity and versatility reflect the enduring importance of crystallography in drug discovery, materials science, and biochemistry. Researchers rely on these tools to interpret experimental data, build structural models, and validate hypotheses about molecular function, which in turn informs pharmaceutical development and chemical engineering.

Economic Impact: From Bench to Market and Public Health Outcomes

The economic implications of these widely cited works are substantial. Foundational algorithms and architectures in AI, such as random forests (2001), later deep learning architectures, and transformer models (Attention Is All You Need, 2017), have transformed not only scientific research but also industry economics. The efficiency and accessibility of algorithms like random forests lowered barriers for applied machine learning in business analytics, healthcare analytics, and environmental monitoring. Transformer models, which introduced a new era of language understanding, have reshaped sectors ranging from search engines and virtual assistants to content moderation and automated reporting. The economic ripple effects include productivity gains in R&D, accelerated time-to-insight for healthcare decision-making, and the creation of new markets in AI tooling and cloud-based AI services.

In health statistics, global cancer incidence and mortality reports provide essential inputs for public health planning and pharmaceutical investment. Although these reports are not clinical interventions themselves, accurate epidemiological data guide resource allocation, preventative strategies, screening programs, and the prioritization of research funding. Such reports influence policy decisions that affect healthcare costs, insurance coverage, and the broader economic burden of disease. The recurring emphasis on cancer statistics across multiple regions highlights the universal demand for robust data to steer health economics, research grants, and international collaboration.

Regional Comparisons: Where Impact Resonates Most

The reach of these works is truly global, though regional patterns emerge when considering adoption, funding priorities, and research ecosystems. North America and Europe have historically been centers for publishing high-impact AI and statistical papers, aided by substantial research funding, academic infrastructure, and strong collaborations between industry and academia. The prominence of deep learning and transformer research aligns with the late-2010s surge in tech company investment in AI, as well as open-source communities that democratized access to powerful models and tools.

Global cancer statistics present a more diffuse but equally important regional story. Countries with established cancer registries, standardized reporting, and robust healthcare systems contribute high-quality data that informs international estimates. Regions with growing epidemiological capacities—such as parts of Asia, Latin America, and Africa—continue to improve reporting accuracy, enabling more precise cross-country comparisons and more effective regional health interventions. This global data network supports multinational pharmaceutical companies, international health organizations, and regional policymakers working to reduce the cancer burden through early detection, vaccination, and targeted therapies.

Synthesis: Trends That Define Modern Research Priorities

Several themes emerge when examining these highly cited works in aggregate:

- Method-first breakthroughs: Foundational algorithms and analytical methods, such as residual networks, random forests, and quantitative PCR analyses, underpin subsequent innovations across disciplines. Their broad applicability makes them reusable building blocks for later work.

- Data-centric science: The emphasis on large-scale data, whether in imaging, genomics, or health statistics, reflects a broader shift toward data-driven discovery. This trend has influenced how research questions are framed, how experiments are designed, and how results are interpreted.

- Interdisciplinary reach: Papers that introduce general-purpose tools or standards (e.g., thematic analysis, DSM-5) cross disciplinary boundaries, enabling researchers in diverse fields to adopt consistent methods and interpretations.

- Global health emphasis: The inclusion of comprehensive cancer statistics signals a sustained global priority on epidemiology, prevention, and health economics. Accurate data not only inform medical practice but also guide policy and investment decisions.

- Open accessibility and reproducibility: Many of the cited works underpin open research practices, shared datasets, and transparent methodologies. This openness accelerates replication, validation, and further innovation, reinforcing the cumulative nature of scientific progress.

Technical Deep Dive: Notable Entries and Their Derivative Impact

- Deep Residual Learning for Image Recognition (2016): Introduced ResNets, enabling much deeper networks without training instability. Impact: improved medical image analysis, autonomous vehicle perception, and large-scale visual recognition systems.

- Real-Time Quantitative PCR for Gene Expression (2001): Standard method for measuring gene expression changes with precision and speed. Impact: accelerated functional genomics, biomarker discovery, and tailored therapeutics.

- Thematic Analysis in Psychology (2006): Practical framework for qualitative data interpretation. Impact: enhanced rigor in qualitative research across psychology, education, and health sciences, supporting mixed-methods studies.

- DSM-5 (2013 edition): Unified framework for mental disorder classification. Impact: consistency in clinical diagnosis, research inclusion criteria, and health policy discussions.

- SHELX Software Suite (2007): Tools for crystal structure determination and refinement. Impact: advances in drug design, materials science, and structural biology.

- Random Forests (2001 introduction): Ensemble learning method with strong performance and interpretability. Impact: widespread use in bioinformatics, finance, marketing analytics, and environmental science.

- Attention Is All You Need (2017): Transformer architecture that revolutionized natural language processing. Impact: breakthroughs in language models, translation, summarization, and question answering.

- ImageNet Classification with Deep Convolutional Neural Networks (2012): Early landmark demonstrating deep learning's efficacy on large-scale visual data. Impact: spurred a surge in deep learning research and practical computer vision applications.

- Global Cancer Statistics (2020, 2018): Key epidemiological references for cancer incidence and mortality worldwide. Impact: shaping screening guidelines, funding priorities, and international health programs.

- U-Nets for Image Processing (2015): Architecture enabling precise biomedical image segmentation. Impact: improved tumor delineation, organ segmentation, and pathology analysis.

- DESeq2 (2014): Software for analyzing count-based RNA-seq data. Impact: robust differential expression analysis in transcriptomics, enabling insights into disease mechanisms.

- Cancer Hallmarks Review (2011): Conceptual framework for understanding cancer biology. Impact: guiding research strategies, therapeutic target discovery, and educational materials.

- ImageNet Database Paper (2009): Resource paper describing a foundational dataset that fueled deep learning progress. Impact: standardized benchmarks and a shared platform for model development.
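To make one of the entries above more concrete, the core operation of the Transformer architecture is scaled dot-product attention: each query is compared with every key, the scaled scores are turned into weights with a softmax, and the output is the weighted average of the values. The sketch below is a minimal single-head version in plain Python, without masking, learned projections, or batching; the helper names and the tiny matrices are illustrative assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    output = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            for k_row in k
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append(
            [sum(w * v_row[j] for w, v_row in zip(weights, v))
             for j in range(len(v[0]))]
        )
    return output


# Two identical keys receive equal weight, so the output is the
# plain average of the two value vectors.
q = [[1.0, 0.0]]
k = [[1.0, 0.0], [1.0, 0.0]]
v = [[2.0, 0.0], [4.0, 2.0]]
result = attention(q, k, v)
print(result)
```

Production implementations run this as batched matrix operations across many heads on accelerators; the loop form here is only meant to make the arithmetic visible.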

A Practical Look at How Businesses and Institutions Use These Insights

- Research institutions leverage these methods to design experiments, analyze large datasets, and publish influential findings that attract funding and collaborations. The cross-disciplinary utility of techniques such as deep learning and statistical analysis supports research portfolios spanning healthcare, engineering, and social sciences.

- Industry adoption centers on AI-driven optimization, predictive analytics, and intelligent automation. Transformer-based models underpin modern search, chatbots, and content generation, while robust image analysis pipelines enable quality control, medical diagnostics, and surveillance applications under ethical and regulatory frameworks.

- Public health agencies rely on global cancer statistics and health indicators to allocate resources, set screening priorities, and track progress toward health outcomes. Data-driven policy decisions benefit from transparent methodologies and reproducible analyses.

Conclusion: A Continuum of Innovation and Collaboration

The most-cited papers of the 21st century collectively narrate a story of rapid methodological advancement, unprecedented data availability, and global collaboration. They demonstrate how a single methodological insight—whether a neural network architecture, an analysis technique, or a diagnostic framework—can cascade into broad, tangible improvements across science, medicine, and society. As AI continues to mature and as data infrastructures expand, the next generation of high-impact research will likely build on these foundations, further blurring the lines between disciplines and accelerating discoveries that previously lived only in theory.

Public interest in these developments remains high, reflecting broader shifts toward data-informed decision-making in everyday life. From medical breakthroughs to smarter software systems, the influence of these papers extends beyond academia, shaping how industries plan, invest, and respond to global health challenges. The ongoing dialogue between method development, empirical validation, and real-world impact will continue to define the research landscape in the years ahead.
